Harden your Serverless API (DoS and Function Orchestration)

DoS attacks and their distributed versions are among the most disastrous consequences of poor API configuration. The dynamic and elastic nature of cloud services makes this type of attack highly insidious and resource-consuming. Similarly, simple mistakes in execution flow configuration can open numerous attack avenues that can be exploited without major effort.

This post continues where the previous one left off. Let’s take a look.

Denial-of-Service (DoS) Attacks

Serverless architecture’s core features, such as automated scalability and high availability, present challenges of their own. In particular, these features can dangerously hide underlying issues, such as an abnormal peak in traffic, that are otherwise easily detectable on non-serverless APIs.

Unlike traditional architectures, serverless architectures handle high demand by automatically scaling their resources and availability, which also increases the associated costs. This can easily lead to financial resource exhaustion if the problem is not mitigated in time.

What you can do…

Identify the root of the problem. Anomalies in API traffic may indicate an underlying attack or a software bug, such as a function trapped in an endless loop.
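As a rough illustration of what spotting an anomaly can look like, the sketch below flags the minutes in which the request count jumps far above its recent average. It is a minimal example and not part of any AWS service: the function name, the window size, and the threshold are all assumptions chosen for the demo, and in practice the counts would come from your monitoring tool of choice.

```python
from statistics import mean, pstdev


def find_traffic_anomalies(counts, window=5, threshold=3.0):
    """Return the indexes whose request count exceeds the trailing mean
    by more than `threshold` standard deviations.

    `counts` is a list of requests per interval (hypothetical input).
    """
    anomalies = []
    for i in range(window, len(counts)):
        history = counts[i - window:i]
        baseline = mean(history)
        # Fall back to 1.0 so perfectly flat traffic does not give a zero spread.
        spread = pstdev(history) or 1.0
        if counts[i] > baseline + threshold * spread:
            anomalies.append(i)
    return anomalies


requests_per_minute = [100, 102, 98, 101, 99, 100, 950, 101]
print(find_traffic_anomalies(requests_per_minute))
```

A flagged interval only tells you that something unusual happened. Whether it is an attack or a function stuck in a loop still has to be investigated.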

Implement usage plans that specify who can access which APIs and define the volume and frequency at which they can access them. A usage plan utilizes API keys to identify clients and measure access to the associated API and its stages. Similarly, throttling and rate limits can restrict access once certain criteria are met. AWS provides a very detailed guide on how to implement usage plans with API Gateway.
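To make the idea of a rate and a burst limit per API key more concrete, here is a small sketch of a token bucket, the algorithm API Gateway uses for throttling. This is only an illustration of the concept, not how API Gateway is implemented internally: the class names, the parameters, and the way time is passed in are assumptions made for the example.

```python
class TokenBucket:
    """Allows `burst` requests at once and refills at `rate` tokens per second."""

    def __init__(self, rate, burst, now=0.0):
        self.rate = rate
        self.burst = burst
        self.tokens = float(burst)
        self.last = now

    def allow(self, now):
        # Refill according to the time elapsed since the previous request.
        elapsed = max(0.0, now - self.last)
        self.tokens = min(self.burst, self.tokens + elapsed * self.rate)
        self.last = max(self.last, now)
        if self.tokens >= 1:
            self.tokens -= 1
            return True
        return False


class UsagePlanLimiter:
    """Keeps one bucket per API key (hypothetical stand-in for a usage plan)."""

    def __init__(self, rate, burst):
        self.rate = rate
        self.burst = burst
        self.buckets = {}

    def allow(self, api_key, now):
        if api_key not in self.buckets:
            self.buckets[api_key] = TokenBucket(self.rate, self.burst, now)
        return self.buckets[api_key].allow(now)


limiter = UsagePlanLimiter(rate=1, burst=2)
for second in (0.0, 0.0, 0.0, 1.0):
    print(second, limiter.allow("client-a", second))
```

Because each key gets its own bucket, a noisy client runs out of tokens without affecting the others.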

Although throttling and rate limits are usually set by default, they can be a double-edged sword because the defaults apply at the account level. This means that if you have more than one function running and one of them reaches this limit, all the remaining functions are throttled too and become unavailable.

One way to fix this is to set individual rate limits per function. However, this is easier said than done as it requires constant, strict discipline on the developer side. What’s more, serverless deployment tools, such as the Serverless Framework, do not provide out-of-the-box support for individual function throttling configuration. Fortunately, a recently published Serverless Framework plugin provides great support to fulfill this need.
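Part of that discipline can be automated. The sketch below is a hypothetical pre-deployment check, not a feature of the Serverless Framework or of AWS: it takes a mapping of function names to their individual limits and reports any function that has no limit of its own, as well as limits that add up to more than the account-level limit. The function names and numbers are made up for the example.

```python
def audit_function_limits(function_limits, account_limit):
    """Return a list of findings for a {function name: limit or None} mapping."""
    findings = []
    # A function without its own limit can use up the whole account-level limit.
    for name in sorted(n for n, limit in function_limits.items() if limit is None):
        findings.append(f"{name}: no individual limit set")
    reserved = sum(limit for limit in function_limits.values() if limit is not None)
    if reserved > account_limit:
        findings.append(
            f"individual limits add up to {reserved}, "
            f"above the account limit of {account_limit}"
        )
    return findings


limits = {"download-image": 50, "save-metadata": None}
for finding in audit_function_limits(limits, account_limit=100):
    print(finding)
```

Running a check like this in a build pipeline keeps a missing limit from reaching production unnoticed.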

Finally, follow the best practices suggested by your cloud vendor. AWS, for example, publishes its own set of recommendations.

Improper Control Over Function Execution Flow

Component orchestration is a key operation in distributed or microservices architectures. Each component or function must be executed in a predetermined sequence (workflow) for the system to work properly. Cloud providers offer several alternatives to implement this such as AWS Step Functions and Azure Logic Apps.

These orchestration tools offer numerous benefits such as the creation of complex sequences of tasks with minimal effort, state management between executions of each component in the flow, decoupling of workflow logic and business logic, and parallel execution of individual workflow instances.

These benefits, however, also present security risks that need to be considered. For example, any alteration of a workflow, however minimal, can leave the system in an inconsistent state or render it completely unusable. Additionally, having a workflow that ensures all the steps (functions) are executed in a predetermined sequence offers a false sense of security. For instance, the workflow may force authentication and data validation before a critical function is invoked. However, if this function or any other component that triggers its execution lacks proper access controls, an attacker can easily skip crucial authentication/authorization steps and execute the workflow with illegal input.

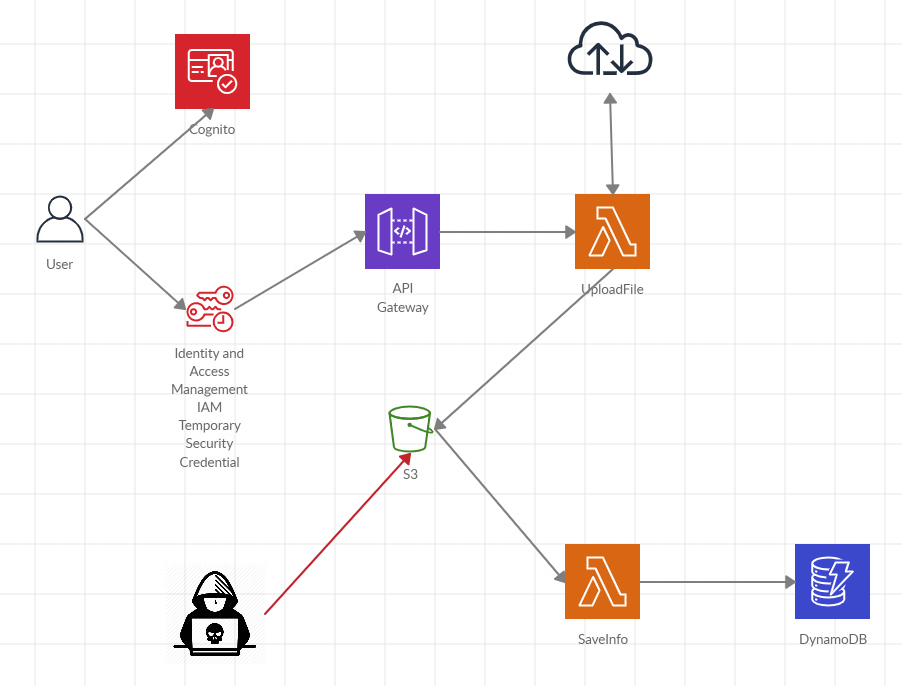

For example, take the simple app outlined below, which downloads an image from a given URL and saves its metadata to DynamoDB, provided the image complies with certain requirements such as size and type.

A user legitimately authenticates with Cognito and gets access to the custom function that receives the URL and performs the corresponding input validations. This function downloads the image and sends it to S3. Then, another function is triggered to get the image metadata and save it to DynamoDB.

Simple (potentially dangerous) app

So far, so good. However, if the S3 bucket is not properly secured, any unauthorized user can upload a file and trigger the second function illegally. In this scenario, all validations regarding file size, file type, API permissions, and API throttling are simply skipped.

What you can do…

In most cases, it is preferable to use a native component orchestration service like the ones mentioned above instead of implementing an ad-hoc workflow manually. Additionally, it is necessary to enforce proper access controls and permissions for the entire workflow and each function in particular. This goes along with the usage of a platform to operate and build serverless functions such as the Serverless Framework.
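A complementary measure, which is not part of the app as described above, is to have the second function repeat the basic checks instead of trusting that the first function already ran. The sketch below assumes the function is triggered by a standard S3 event notification and reads the object key and size from each record. The size limit, the allowed extensions, and the handler name are assumptions made for the example, and an extension check is of course weaker than inspecting the actual file contents.

```python
import os
from urllib.parse import unquote_plus

MAX_IMAGE_BYTES = 5 * 1024 * 1024  # assumed limit for this example
ALLOWED_EXTENSIONS = {".png", ".jpg", ".jpeg"}  # assumed allow-list


def object_key(record):
    # Keys arrive URL-encoded in S3 event notifications.
    return unquote_plus(record["s3"]["object"]["key"])


def is_acceptable(record):
    """Re-check size and extension for a single S3 event record."""
    size = record["s3"]["object"].get("size", 0)
    extension = os.path.splitext(object_key(record))[1].lower()
    return 0 < size <= MAX_IMAGE_BYTES and extension in ALLOWED_EXTENSIONS


def handler(event, context=None):
    """Split the incoming objects into accepted and rejected keys.

    A real function would read the metadata of accepted objects and
    save it to DynamoDB; that part is left out of this sketch.
    """
    accepted, rejected = [], []
    for record in event.get("Records", []):
        target = accepted if is_acceptable(record) else rejected
        target.append(object_key(record))
    return {"accepted": accepted, "rejected": rejected}
```

With a check like this in place, a file dropped straight into the bucket still has to pass the same size and type rules before its metadata is processed.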

Another (potentially extreme) solution to the problem described above is to set up more granular permissions on the bucket so it can be accessed only by certain accounts or roles. See the example below.

    {
      "Id": "bucketPolicy",
      "Statement": [
        {
          "Action": "s3:*",
          "Effect": "Deny",
          "NotPrincipal": {
            "AWS": [
              "arn:aws:iam::<allowed_account>:user/<USERNAME>",
              "arn:aws:iam::<allowed_account>:role/<ROLENAME>"
            ]
          },
          "Resource": [
            "arn:aws:s3:::<BUCKET>",
            "arn:aws:s3:::<BUCKET>/*"
          ]
        }
      ],
      "Version": "2012-10-17"
    }

Wrapping Up…

The benefits and advantages provided by serverless architectures can easily be eclipsed by a set of challenges uniquely associated with them. Two of these challenges are DoS attacks and vulnerabilities related to component orchestration.

In this post, we reviewed the way serverless functions can become an open avenue to DoS attacks when proper throttling, rate limits, and access controls are missing. Specifically, the elastic nature of serverless components can lead to financial resource exhaustion in the event of a DoS or DDoS attack.

We also reviewed the benefits of service orchestration using serverless components and the problems that may arise when this key operation is not implemented properly.

As usual, we saw that using cloud-native tools, such as AWS Step Functions, in combination with proper access control is the way to go when implementing distributed applications in the cloud.

The companion repository for this post can be found at https://github.com/jpcedenog/harden-serverless-api-dos-orchestration.

Thanks for reading!

Image by Gerd Altmann from Pixabay