Streamline your data-driven API: The Power of Containers

In my previous post, I outlined the process of infusing DevOps into the development of a data-driven product. We then walked through creating a development pipeline for data-driven projects in a repeatable, automated way, including testing capabilities that add an important feedback loop. We also looked at the benefits of continuous delivery, and at the advantages of using managed cloud services to achieve it instead of traditional tools and frameworks.

While having a scripted process to install and deploy a data-driven project anywhere is highly beneficial, environmental heterogeneity remains a hurdle to overcome. This is especially true for large development teams in which development environment standards are loosely defined or nonexistent. The problem gets worse when the lack of standards extends to production environments.

Having little to no control over development and production environments causes unnecessary trouble at key moments in a project's life cycle. For instance, onboarding a new team member may take days or weeks if there is no way to provide them with a pre-made development environment. Similarly, collaboration with external parties (a very common activity in data-driven projects) is hindered when results or issues found in one local environment cannot be reproduced in another.

Additional challenges arise when the output of a data-driven project needs to be published as an API in production. More often than not, deploying an API involves multiple server instances (for resilience and load balancing) that must share the same configuration, updates, patches, etc. Without a process to boot any number of identical, pre-made instances, deploying a data API becomes a monumental task.

Using containers to reduce environmental friction

The advent of containers addressed many, if not all, of the problems described above. Containers were conceived as a lighter, more efficient, and more agile alternative to virtual machines, which were themselves a great improvement over bare-metal servers and workstations.

The benefits of containers are particularly relevant in projects that follow continuous integration (CI) and continuous delivery (CD) practices, because provisioning environments and deploying artifacts become trivial. Containers also make build and deployment processes more repeatable, thanks to their platform independence.

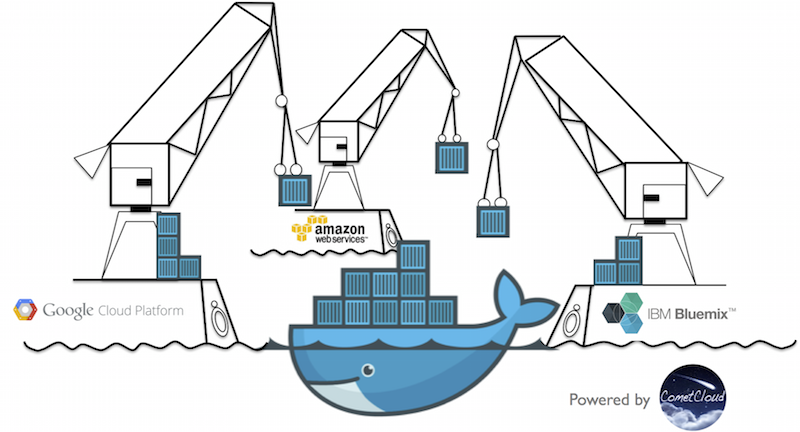

Docker Containers (Source: IBM)

In the context of DevOps, containers streamline the packaging, shipping, and execution of software artifacts, each built as a portable, self-contained unit isolated from its host environment. It is precisely in the execution of those artifacts that containers shine the most.

Thanks to awesome tools such as Docker Swarm, Kubernetes, and Amazon ECS, containers can be orchestrated, managed, and monitored. As a result, high availability and good application performance are much easier to achieve: the number of running container instances can be scaled up or down dynamically, which gives us horizontal scalability.
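As a rough illustration of how an orchestrator can decide how many instances to run, the Python sketch below implements the ratio-based rule documented for the Kubernetes Horizontal Pod Autoscaler: desired replicas = ceil(current replicas * current metric / target metric), with no change when that ratio is within a tolerance (0.1 by default) of 1.0. The function name and the 1 to 10 replica bounds are illustrative assumptions; the real controller also accounts for pod readiness, missing metrics, and stabilization windows, all of which are omitted here.

```python
import math


def desired_replicas(current_replicas: int,
                     current_metric: float,
                     target_metric: float,
                     min_replicas: int = 1,
                     max_replicas: int = 10,
                     tolerance: float = 0.1) -> int:
    """Replica count suggested by a ratio-based horizontal scaling rule.

    Follows the rule documented for the Kubernetes Horizontal Pod Autoscaler:
    desired = ceil(current * current_metric / target_metric), skipped when the
    ratio is within `tolerance` of 1.0, then clamped to [min_replicas, max_replicas].
    The bounds used here are hypothetical defaults.
    """
    if current_replicas <= 0 or target_metric <= 0:
        raise ValueError("current_replicas and target_metric must be positive")
    ratio = current_metric / target_metric
    if abs(ratio - 1.0) <= tolerance:
        desired = current_replicas  # close enough to the target: leave it alone
    else:
        desired = math.ceil(current_replicas * ratio)  # scale proportionally
    # Never go below the minimum or above the maximum number of instances.
    return max(min_replicas, min(max_replicas, desired))
```

For example, `desired_replicas(3, 90, 60)` (three instances averaging 90% CPU against a 60% target) returns 5, while `desired_replicas(4, 20, 60)` scales down to 2.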

Containers are also highly beneficial during the development of data-driven projects. In particular, creating a development environment can be reduced to running a script, which ensures repeatability and independence from the underlying hardware and software ([1]). Docker is a great example: images are built from a Dockerfile, a simple text file that declares all the commands needed to assemble the image, and containers are then started from that image.

Once the image is built, any number of container instances can be started from it on any host with a compatible container runtime (and CPU architecture), regardless of what else is installed there. This allows team members to specify precisely the steps required to recreate any development environment (OS, packages, custom libraries, etc.) in a repeatable and efficient manner. As a consequence, results obtained at any point in time can be reproduced and shared with confidence. Even better, by using containers we can ensure that production environments use the same set of libraries used during development.
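One hedged way to check that last point in practice (an illustrative addition, not part of the original workflow) is to capture `pip freeze` output in the development container and in a production container, then compare the pinned versions. The sketch below assumes `name==version` lines; the function names are hypothetical, and package names are normalized as described in PEP 503.

```python
from __future__ import annotations

import re


def normalize(name: str) -> str:
    """Normalize a package name as PEP 503 does (case-insensitive; '-', '_', '.' equivalent)."""
    return re.sub(r"[-_.]+", "-", name).lower()


def parse_pins(text: str) -> dict[str, str]:
    """Parse `name==version` lines (e.g. `pip freeze` output) into a dict."""
    pins: dict[str, str] = {}
    for raw in text.splitlines():
        line = raw.split("#", 1)[0].strip()  # drop comments and surrounding whitespace
        if "==" not in line:
            continue  # skip blanks and entries without an exact pin (e.g. "pkg @ file://...")
        name, version = line.split("==", 1)
        pins[normalize(name.strip())] = version.strip()
    return pins


def diff_environments(dev: str, prod: str) -> dict[str, tuple[str | None, str | None]]:
    """Map each package whose pin differs to (dev_version, prod_version); None means absent."""
    dev_pins, prod_pins = parse_pins(dev), parse_pins(prod)
    return {
        name: (dev_pins.get(name), prod_pins.get(name))
        for name in sorted(dev_pins.keys() | prod_pins.keys())
        if dev_pins.get(name) != prod_pins.get(name)
    }


if __name__ == "__main__":
    dev = "numpy==1.26.4\npandas==2.2.1\n"
    prod = "numpy==1.26.4\npandas==2.1.0\n"
    print(diff_environments(dev, prod))  # {'pandas': ('2.2.1', '2.1.0')}
```

Failing a CI step whenever the result is non-empty would turn "same set of libraries" into an enforced check. Note that `conda list --export` uses a different `name=version=build` format, which this sketch does not parse.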

Another benefit of using containers to define the development environment is that the same image can be reused in the CI and CD processes. As seen previously, we used AWS CodePipeline to implement a simple build and deployment flow for our data project. That pipeline used AWS CodeBuild, which in turn created a Docker image based on a series of parameters provided in a buildspec.yml file.

A nice feature of AWS CodeBuild is that it can also run builds inside pre-existing, custom Docker images. For this, the custom image must already exist in a container registry (such as Amazon ECR) before CodeBuild can reference it. To publish a custom image to Amazon ECR, all we need to do is build it locally from our Dockerfile, tag it with the repository's URI, and push it to the registry.
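The build, tag, and push steps can be sketched with a small Python helper that only assembles and prints the commands (it does not run them). The account ID `123456789012`, the region, the repository name, and the local image name `data-project` are hypothetical placeholders, and the ECR repository is assumed to exist already (for example, created with `aws ecr create-repository`). The `codebuild_environment` helper builds a dictionary in the shape expected by the `environment` parameter of boto3's `codebuild.create_project`, pointing CodeBuild at the pushed image; the compute type is an arbitrary choice.

```python
from __future__ import annotations


def ecr_registry(account_id: str, region: str) -> str:
    """Hostname of the private Amazon ECR registry for an account and region."""
    return f"{account_id}.dkr.ecr.{region}.amazonaws.com"


def ecr_image_uri(account_id: str, region: str, repository: str, tag: str = "latest") -> str:
    """Full URI (registry/repository:tag) the pushed image will have."""
    return f"{ecr_registry(account_id, region)}/{repository}:{tag}"


def push_commands(account_id: str, region: str, repository: str,
                  tag: str = "latest", local_name: str = "data-project") -> list[str]:
    """Shell commands to build, tag, and push the image (returned, not executed)."""
    registry = ecr_registry(account_id, region)
    uri = ecr_image_uri(account_id, region, repository, tag)
    return [
        # 1. Authenticate the local Docker client against the ECR registry.
        f"aws ecr get-login-password --region {region} | "
        f"docker login --username AWS --password-stdin {registry}",
        # 2. Build the image from the Dockerfile in the current directory.
        f"docker build -t {local_name}:{tag} .",
        # 3. Tag the local image with the ECR repository URI.
        f"docker tag {local_name}:{tag} {uri}",
        # 4. Push the tagged image to the registry.
        f"docker push {uri}",
    ]


def codebuild_environment(image_uri: str) -> dict[str, str]:
    """`environment` block for a CodeBuild project that runs builds in a custom image."""
    return {
        "type": "LINUX_CONTAINER",
        "image": image_uri,
        "computeType": "BUILD_GENERAL1_SMALL",
        # Pull the custom image using the project's service role credentials.
        "imagePullCredentialsType": "SERVICE_ROLE",
    }


if __name__ == "__main__":
    # Hypothetical account, region, and repository names.
    for command in push_commands("123456789012", "us-east-1", "data-project-devops"):
        print(command)
```

Choosing `SERVICE_ROLE` for `imagePullCredentialsType` means CodeBuild pulls the image with the project's service role, so that role needs permission to read from the ECR repository.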

This whole process can be further scripted and automated by means of tools such as Terraform (more on this in future posts!).

Wrapping up…

As we can see, having our development environment properly defined in a Dockerfile goes a long way. Not only does it allow us to recreate and share the environment anywhere, but it also helps us improve and speed up our build and deployment processes.

Below is the Dockerfile used to recreate the environment described in my previous post.

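```dockerfile
FROM continuumio/miniconda3

# Use bash for RUN so that `source activate` (last step) works; the default
# /bin/sh of this Debian-based image does not provide `source`.
SHELL ["/bin/bash", "-c"]

# System packages, up-to-date pip and conda (-y: no interactive prompt during
# the build), and the conda channels to use.
RUN apt-get update && \
    apt-get install -y build-essential wget git && \
    pip install --upgrade pip && \
    conda update -y conda && \
    printf "channels:\n  - defaults\n  - conda-forge\n" > ~/.condarc

# Dummy AWS credentials (placeholder values, not real keys). printf writes each
# key on its own unindented line; never bake real secrets into an image.
RUN mkdir -p ~/.aws/ && \
    printf "[default]\naws_access_key_id = FakeKey\naws_secret_access_key = FakeKey\naws_session_token = FakeKey\n" > ~/.aws/credentials

# Fetch the project and make it the working directory.
RUN git clone https://github.com/jpcedenog/data-project-devops.git /var/data-project-devops
WORKDIR /var/data-project-devops

# Set up the project via its Makefile, then install the pip-only requirements
# into the data-driven-project conda environment.
RUN make setup && \
    make install && \
    source activate data-driven-project && \
    pip install -r requirements_pip.txt
```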